Update 7: Fixed Camera Rendering

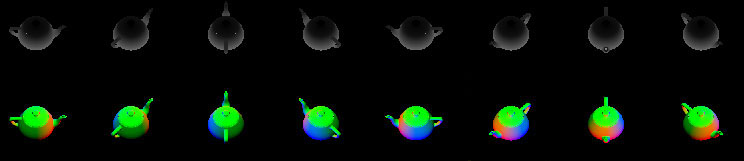

I’ve had this idea for a fixed camera rendering system in the back of my mind for a little while, so I thought I would put together a proof of concept. The idea is that the world can only be viewed from isometric and top-down oblique perspectives, but the game is still a fully 3D world. It came to me while I was watching a video by Simon Foster, the artist behind RollerCoaster Tycoon 1 and 2, in which he explains how he created the graphics for both games. He built the game models in 3D Studio Max, rendered them out from different angles, and then manually arranged the renders on a giant sprite sheet ready for use in the game. It got me thinking: what if the game itself could render 3D models to a sprite sheet, along with the normals and depth for each model? That would allow for procedurally generated sprites.

Obviously this kind of graphics has its drawbacks. You can’t rotate the camera freely to any angle; instead, you can only view the world from 8 fixed angles, 4 of which resemble an isometric view (although it isn’t true isometric). I did a bit of research, and someone on YouTube has implemented a similar idea. It’s a very cool effect: he uses high-poly, pre-rendered assets with ambient occlusion baked in.
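To make the 8 fixed angles concrete, here is a small Python sketch. It assumes (my reading, not something the engine confirms) that the 8 views are camera yaws spaced 45° apart around the vertical axis with one shared downward pitch: the 4 diagonal yaws give the isometric-style views and the 4 axis-aligned yaws give the top-down oblique ones. `PITCH_DEG` and `camera_directions` are hypothetical names and the 30° pitch is an assumed value; for comparison, a true isometric camera sits at an elevation of arctan(1/√2) ≈ 35.26°.

```python
import math

# Hypothetical setup: 8 camera yaws spaced 45 degrees apart around the
# world's vertical (Y) axis, all sharing one fixed downward pitch.
PITCH_DEG = 30.0  # assumed elevation, deliberately not true isometric

# Elevation a true isometric camera would use: arctan(1 / sqrt(2)).
TRUE_ISO_PITCH_DEG = math.degrees(math.atan(1 / math.sqrt(2)))


def camera_directions(pitch_deg=PITCH_DEG):
    """Return (yaw_deg, view_dir, kind) for each of the 8 fixed angles."""
    pitch = math.radians(pitch_deg)
    views = []
    for i in range(8):
        yaw_deg = 45.0 * i
        yaw = math.radians(yaw_deg)
        # Unit vector the camera looks along (Y-up, tilted down at the ground).
        view_dir = (
            math.cos(pitch) * math.sin(yaw),
            -math.sin(pitch),
            math.cos(pitch) * math.cos(yaw),
        )
        # Odd multiples of 45 degrees look along a diagonal (isometric-style);
        # even multiples look straight along an axis (top-down oblique).
        kind = "isometric-style" if i % 2 == 1 else "oblique"
        views.append((yaw_deg, view_dir, kind))
    return views


for yaw_deg, view_dir, kind in camera_directions():
    print(f"{yaw_deg:5.1f}  {kind}")
```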

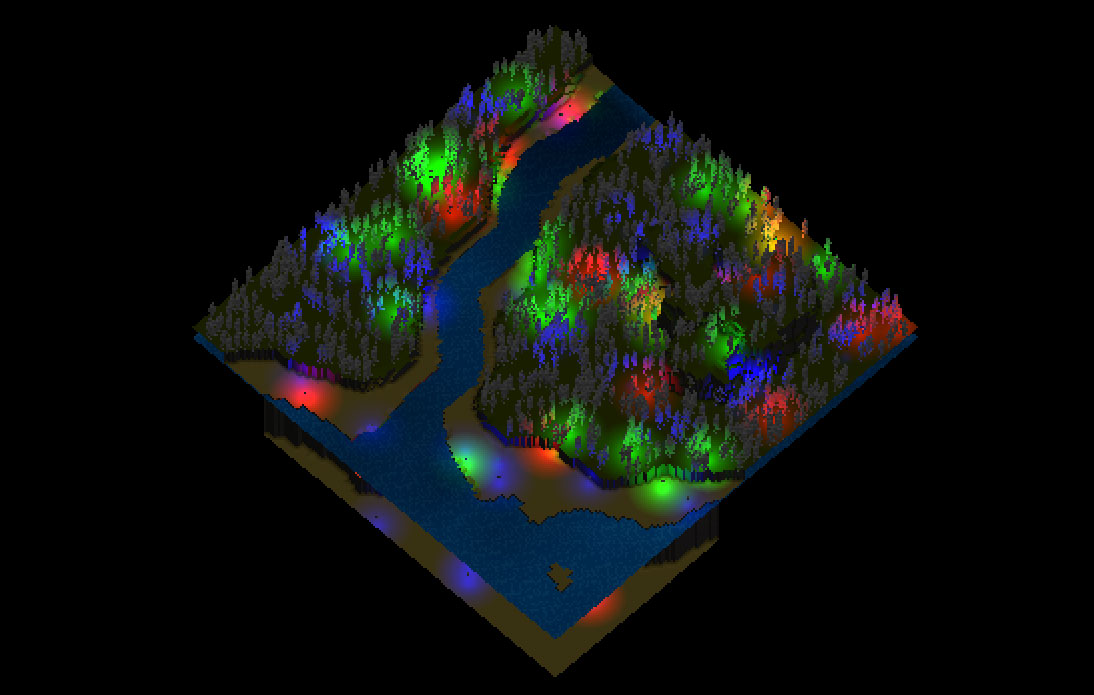

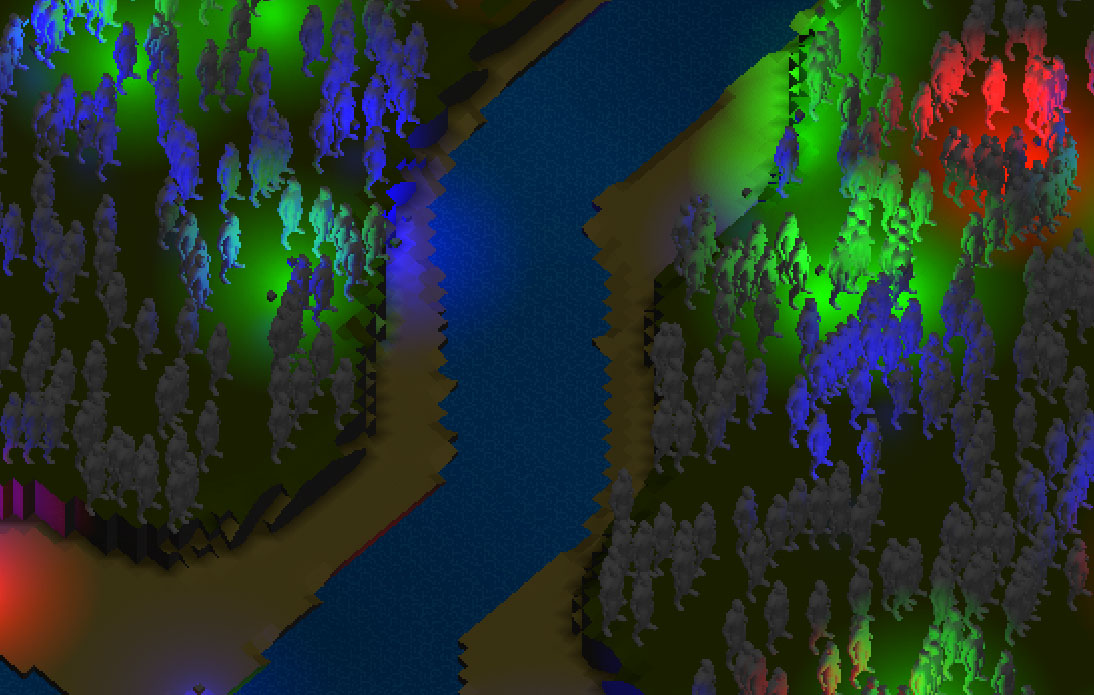

Stress Testing

Here is a stress test with 1,000+ models and 200+ lights. The engine maintains a solid frame rate (on my five-year-old MacBook Pro).

I’m basically taking a common graphics optimization technique known as ‘imposters’ to the next level. An imposter is a 2D image that replaces 3D geometry at a certain level of detail (LOD). For example, in a video game a complex tree model might be replaced with an image of the tree when it’s viewed from far away. The difference is that these imposters look exactly the same as the original model: because they store depth and normals, they can be lit in real time as if they were 3D.
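Here is a minimal sketch of why storing normals is enough to light an imposter: each texel’s normal is decoded and fed into an ordinary Lambert diffuse term, exactly as it would be for a real 3D surface. The 8-bit encoding `n = rgb / 255 * 2 - 1` and the helper names are assumptions for illustration; the post doesn’t say how the engine packs its normals.

```python
import math


def decode_normal(rgb):
    """Map an 8-bit RGB texel (0..255) back to a unit normal in [-1, 1]^3.

    Assumes the common encoding n = (rgb / 255) * 2 - 1; how the engine
    actually packs normals is not specified.
    """
    n = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in n))
    return [c / length for c in n]


def lambert(normal, light_dir):
    """Diffuse term max(N . L, 0), with light_dir pointing toward the light."""
    ln = math.sqrt(sum(c * c for c in light_dir))
    l = [c / ln for c in light_dir]
    return max(0.0, sum(a * b for a, b in zip(normal, l)))


# A texel whose stored normal points (almost exactly) straight up, +Y.
n = decode_normal((128, 255, 128))
print(lambert(n, (0.0, 1.0, 0.0)))  # light directly overhead: close to 1
print(lambert(n, (1.0, 0.0, 0.0)))  # grazing light from the side: close to 0
```

The same two functions work whether the normal came from rasterized geometry or from an atlas texel, which is the whole point of the technique.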

Behind the scenes, the engine renders a model 8 times from different angles and packs the results into a single texture atlas. When the model needs to be rendered, just a single polygon is drawn on screen, and the normals and depth for that part of the screen are read straight from the atlas. In theory, the final output should be no different from rendering the full 3D geometry, assuming the camera stays in a fixed position. This is just a proof of concept at the moment; I haven’t tested how it really performs compared to normal 3D geometry. I’m not convinced the savings will be that great right now, but this rendering method opens the door to other optimizations later on.
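The atlas lookup can be sketched like this, assuming a hypothetical 4 × 2 grid layout and a camera yaw that snaps to the nearest 45° view. `ATLAS_COLS`, `view_index_for_yaw`, `atlas_rect` and `imposter_quad` are illustrative names, not the engine’s API, and v is assumed to increase upward (the OpenGL convention).

```python
from dataclasses import dataclass

# Hypothetical layout: the 8 pre-rendered views sit in a 4 x 2 grid on one
# atlas texture. The post only says the views share a single atlas.
ATLAS_COLS = 4
ATLAS_ROWS = 2


@dataclass(frozen=True)
class UVRect:
    u0: float
    v0: float
    u1: float
    v1: float


def view_index_for_yaw(yaw_deg):
    """Snap a camera yaw (degrees) to the nearest of the 8 fixed views."""
    return round((yaw_deg % 360.0) / 45.0) % 8


def atlas_rect(view_index):
    """UV rectangle of one view's cell in the atlas (0..1 texture space)."""
    col = view_index % ATLAS_COLS
    row = view_index // ATLAS_COLS
    w = 1.0 / ATLAS_COLS
    h = 1.0 / ATLAS_ROWS
    return UVRect(col * w, row * h, (col + 1) * w, (row + 1) * h)


def imposter_quad(view_index):
    """The single quad drawn per model: 4 corners paired with atlas UVs.

    A fragment shader would sample the normal and depth textures at the
    interpolated UVs instead of rasterizing the model's triangles.
    """
    r = atlas_rect(view_index)
    corners = [(-0.5, 0.0), (0.5, 0.0), (0.5, 1.0), (-0.5, 1.0)]
    # Bottom corners take v0, top corners take v1 (v increases upward).
    uvs = [(r.u0, r.v0), (r.u1, r.v0), (r.u1, r.v1), (r.u0, r.v1)]
    return list(zip(corners, uvs))


quad = imposter_quad(view_index_for_yaw(225.0))
print(quad)
```

In a real renderer the quad would be sized and positioned per model in world space; the point here is only that one cell lookup replaces the model’s whole mesh.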

For this type of rendering to work, depth is compared using the world-space Y axis. This differs from a typical depth buffer, which holds depth values in window space. The issue is that in a deferred rendering system, when the G-buffers are combined and the scene is lit, world coordinates are calculated directly from the depth buffer, and you need those world coordinates to calculate the distance to each light source. That works fine normally, but when the depth buffer only holds the world-space Y coordinate, there isn’t enough information to do the lighting calculations. The solution is pretty simple: I’ve done away with the depth buffer entirely and replaced it with a position buffer, meaning I store the XYZ world position of each fragment in the RGB channels of a texture.

This was just a bit of fun, but it might have some use within the game, maybe for trees and for models viewed at a distance.
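A rough CPU-side model of the position buffer idea follows. It assumes a Y-up world viewed from above, so the fragment with the larger world-space Y wins the depth test, and it uses a simple linear falloff for the point light; both are assumptions for illustration, and the function names are hypothetical rather than the engine’s shader code.

```python
import math

# Minimal model of one G-buffer pixel, assuming a Y-up world viewed from
# above so that a larger world-space Y means "closer to the camera".


def depth_test_world_y(fragments):
    """Keep the fragment with the greatest world-space Y.

    Each fragment is (position_xyz, normal_xyz). The winning position is
    what would be written to the RGB channels of the position buffer.
    """
    return max(fragments, key=lambda frag: frag[0][1])


def point_light(position, normal, light_pos, radius):
    """Diffuse point-light contribution computed from the stored XYZ.

    No depth-to-world reconstruction is needed: the distance to the light
    comes straight from the position buffer.
    """
    to_light = [l - p for l, p in zip(light_pos, position)]
    dist = math.sqrt(sum(c * c for c in to_light))
    if dist == 0.0 or dist >= radius:
        return 0.0
    l_dir = [c / dist for c in to_light]
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, l_dir)))
    falloff = 1.0 - dist / radius  # simple linear falloff (assumed)
    return n_dot_l * falloff


frags = [
    ((1.0, 0.5, 2.0), (0.0, 1.0, 0.0)),  # ground-level surface
    ((1.0, 3.0, 2.0), (0.0, 1.0, 0.0)),  # roof of a model above it
]
pos, nrm = depth_test_world_y(frags)
print(pos)  # (1.0, 3.0, 2.0)
print(point_light(pos, nrm, (1.0, 7.0, 2.0), 8.0))  # 0.5
```

Storing full XYZ costs more memory than a single depth channel, but it removes any dependence on how depth was encoded, which is what makes the Y-based depth test workable.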

Further reading

http://blog.wolfire.com/2010/10/Imposters

http://http.developer.nvidia.com/GPUGems3/gpugems3_ch21.html

https://software.intel.com/en-us/articles/impostors-made-easy